Suggested Citation:"7 Defining Requirements and Design." National Research Council. 2007. Human-System Integration in the System Development Process: A New Look. Washington, DC: The National Academies Press. doi: 10.17226/11893.

7

Defining Requirements and Design

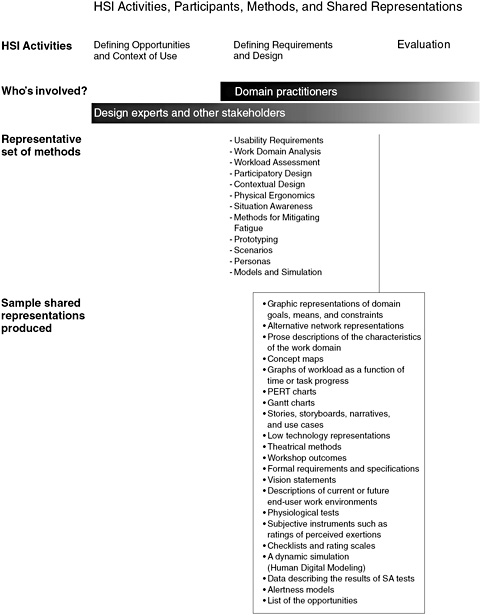

Design is fundamentally an innovative process. The methods discussed in this chapter are intended to support identification and exploration of design alternatives to meet the requirements revealed by analyses of opportunity space and context of use. The methods are not a substitute for creativity or inventiveness. Rather, they provide a structure and context in which innovation can take place. We begin with a discussion of the need for and the methods used to establish requirements based on the concept of user-centered design. The types of methods included here are work domain analysis; workload assessment; situation awareness assessment; participatory design; contextual design; physical ergonomics; methods for analyzing and mitigating fatigue; and the use of prototyping, scenarios, personas, and models and simulations. As with the descriptions in Chapter 6, each type of method is described in terms of uses, shared representations, contributions to the system design phases, and strengths, limitations, and gaps. These methods are grouped under design because their major contributions are made in the design phase; however, it is important to note that they are also used in defining the context of use and in evaluating design outcomes as part of system operation. Figure 7-1 provides an overview.

FIGURE 7-1 Representative methods and sample shared representations for defining requirements and design.

USABILITY REQUIREMENTS

Overview

Inadequate user requirements are a major contributor to project failure. The most recent CHAOS report by the Standish Group (2006), which analyzes the reasons for technology project failure in the United States, found that only 34 percent of projects were successful; 15 percent completely failed and 51 percent were only partially successful.

Five of the eight most frequently cited causes of failure relate to poor user requirements:

- 13.1 percent, incomplete requirements
- 12.4 percent, lack of user involvement
- 10.6 percent, inadequate resources
- 9.9 percent, unrealistic user expectations
- 9.3 percent, lack of management support
- 8.7 percent, requirements keep changing
- 8.1 percent, inadequate planning
- 7.5 percent, system no longer needed

Among the main reasons for poor user requirements are (1) an inadequate understanding of the intended users and the context of use, and (2) vague usability requirements, such as "the system must be intuitive to use."

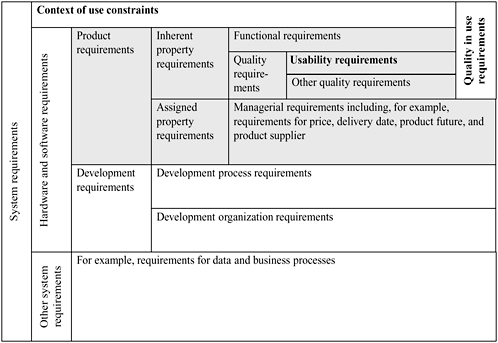

Figure 7-2 shows how usability requirements relate to other system requirements. Usability requirements can be seen from two perspectives: characteristics designed into the product and the extent to which the product meets user needs (quality in use requirements).

There are two types of usability requirements. Usability as a product quality characteristic is primarily concerned with ease of use. ISO/IEC 9126-1 (International Organization for Standardization, 2001) defines usability in terms of understandability, learnability, operability, and attractiveness. There are numerous sources of guidance on designing user interface characteristics that achieve these objectives (see the section on guidelines and style guides under usability evaluation). While designing to conform to guidelines will generally improve an interface, usability guidelines are not sufficiently specific to constitute requirements that can be easily verified. Style guides are more precise and are valuable in achieving consistency across screen designs produced by different developers. A style guide tailored to project needs should form part of the detailed usability requirements.

FIGURE 7-2 Classification of requirements.

SOURCE: Adapted from ISO/IEC 25030 (International Organization for Standardization, 2007).

At a more strategic level, usability is the extent to which the product meets user needs. ISO 9241-11 (International Organization for Standardization, 1998) defines this as the extent to which a product is effective, efficient, and satisfying in a particular context of use. This high-level requirement is referred to in ISO software quality standards as "quality in use." It is determined not only by the ease of use, but also by the extent to which the functional properties and other quality characteristics meet user needs in a specific context of use.

In these terms, usability requirements are very closely linked to the success of the product.

- Effectiveness is a measure of how well users can perform the job accurately and completely.
- Efficiency is a measure of how quickly a user can perform work and is generally measured as task time, which is critical for productivity.
- Satisfaction is the degree to which users like the product, a subjective response that includes the perceived ease of use and usefulness. Satisfaction is a success factor for any product with discretionary use and is essential to maintain workforce motivation.

Uses of Methods

Measures of effectiveness, efficiency, and satisfaction provide a basis for specifying concrete usability requirements.

Measure the Usability of an Existing System

If in doubt, the figures for an existing comparable system can be used as the minimum requirements for the new system. Evaluate the usability of the current system when carrying out key tasks, to obtain a baseline for the current system. The measures to be taken would typically include

- success rate (percentage of tasks in which all business objectives are met),
- mean time taken for each task, and
- mean satisfaction score using a questionnaire.
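The three baseline measures listed above can be computed from simple observation records. The following is a minimal sketch, not a prescribed procedure: the `TaskObservation` record, its field names, and the choice to average task time over all attempts (rather than successful attempts only) are assumptions made for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskObservation:
    """One participant's attempt at one key task (hypothetical record format)."""
    completed: bool       # True if all business objectives for the task were met
    task_time_s: float    # time taken for the attempt, in seconds
    satisfaction: float   # questionnaire score associated with the attempt


def baseline_measures(observations):
    """Return success rate (percent), mean task time, and mean satisfaction."""
    if not observations:
        raise ValueError("at least one observation is required")
    successes = sum(1 for o in observations if o.completed)
    return {
        "success_rate_pct": 100.0 * successes / len(observations),
        # Assumption: time is averaged over all attempts, successful or not.
        "mean_task_time_s": mean(o.task_time_s for o in observations),
        "mean_satisfaction": mean(o.satisfaction for o in observations),
    }
```

In practice the measures would be computed separately for each key task and user group, so that the baseline matches the context of use in which the new system will be evaluated.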

Specify Usability Requirements for the New System

Define the requirements for the new system, including the type of users, tasks, and working environment. Use the baseline usability results as a basis for establishing usability requirements. A simple requirement would be that when the same types of users carry out the same tasks, the success rate, task time, and user satisfaction should be at least as good as for the current system.

It is useful to establish a range of values, such as

- the minimum to be achieved,
- a realistic objective, and
- the ideal objective (from a business or operational perspective).

It may also be appropriate to establish the usability objectives for learnability, for example, the duration of a course (or use of training materials) and the user performance and satisfaction expected both immediately after training and after a designated length of use.

It is also important to define any additional requirements for user performance and satisfaction related to users with disabilities (accessibility), critical business functions (safety), and use in different environments (universality).

Depending on the development environment, requirements may, for example, either be

- iteratively elaborated as more information is obtained from usability activities, such as paper prototyping during development, or
- agreed by all parties before development commences and subsequently modified only by mutual agreement.

Test Whether the Usability Requirements Have Been Achieved

Summative methods for measuring quality in use (see Chapter 8) can be used to evaluate whether the usability objectives have been achieved. If any of the measures fall below the minimum acceptable values, the potential risks associated with releasing the system before the usability has been improved should be assessed. The results can be used to prioritize future usability work in subsequent releases.
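The comparison of measured values against the minimum, realistic, and ideal objectives described earlier can be sketched as follows. This is an illustrative sketch only: the `UsabilityRequirement` class, the `higher_is_better` flag (needed because a lower task time is better while a higher success rate is better), and the example thresholds are assumptions, not part of any standard.

```python
from dataclasses import dataclass


@dataclass
class UsabilityRequirement:
    """A measurable requirement with a range of target values (hypothetical)."""
    name: str
    minimum: float      # the minimum to be achieved
    realistic: float    # a realistic objective
    ideal: float        # the ideal objective
    higher_is_better: bool = True  # False for measures such as task time

    def assess(self, measured: float) -> str:
        """Return the highest objective level that the measured value reaches."""
        sign = 1 if self.higher_is_better else -1
        value = sign * measured
        if value >= sign * self.ideal:
            return "ideal"
        if value >= sign * self.realistic:
            return "realistic"
        if value >= sign * self.minimum:
            return "minimum"
        return "below minimum"


def below_minimum(requirements, measured):
    """Names of requirements whose measured value misses the minimum."""
    return [r.name for r in requirements
            if r.assess(measured[r.name]) == "below minimum"]


# Example thresholds are invented for illustration.
requirements = [
    UsabilityRequirement("success_rate_pct", minimum=75, realistic=85, ideal=95),
    UsabilityRequirement("mean_task_time_s", minimum=120, realistic=90, ideal=60,
                         higher_is_better=False),
]
measured = {"success_rate_pct": 80, "mean_task_time_s": 130}
at_risk = below_minimum(requirements, measured)
```

Any name returned by `below_minimum` marks a measure for which the release risk should be assessed, as the paragraph above describes.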

Shared Representations

The Common Industry Specification for Usability Requirements (Theofanos, 2006) provides a checklist and a format that can be used initially to support communication between the parties involved to obtain a better understanding of the usability requirements. When the requirements are more completely defined, it can be used as a formal specification of requirements. These requirements can subsequently be tested and verified.

The specification is in three parts:

- The context of use: intended users, their goals and tasks, associated equipment, the physical and social environment in which the product will be used, and examples of scenarios of use. An incomplete understanding of the context of use is a frequent reason for partial or complete failure of a system when implemented. The context of use is composed of the characteristics of the users, their task, and the usage environment. There are several methods that can be used to obtain an adequate understanding of this type of information (see Chapter 6).
- Usability measures: effectiveness, efficiency, and satisfaction measures for the main scenarios of use with target values when feasible.
- The test method: the procedure to be used to test whether the usability requirements have been met and the context in which the measurements will be made. This provides a basis for testing and verification.

The context of use should always be specified. The importance of specifying criteria for usability measures (and an associated range of acceptable values) will depend on the potential risks and consequences of poor usability.
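To make the three-part structure concrete, the sketch below models a requirements record and a simple completeness review. It is not the format defined by the Common Industry Specification for Usability Requirements: every class and field name here is hypothetical, and the review rules simply mirror the text (context of use is always required; measures and a test method are noted when absent).

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ContextOfUse:
    users: List[str]          # intended users
    tasks: List[str]          # goals and tasks
    equipment: List[str]      # associated equipment
    environment: str          # physical and social environment
    scenarios: List[str] = field(default_factory=list)


@dataclass
class UsabilityMeasure:
    scenario: str
    kind: str                         # "effectiveness", "efficiency", or "satisfaction"
    description: str
    target: Optional[float] = None    # target values are given when feasible


@dataclass
class RequirementsSpec:
    context: Optional[ContextOfUse] = None
    measures: List[UsabilityMeasure] = field(default_factory=list)
    test_method: Optional[str] = None

    def review(self) -> List[str]:
        """List the parts of the record that still need attention."""
        notes = []
        if self.context is None or not self.context.users or not self.context.tasks:
            notes.append("context of use is missing or incomplete")
        covered = {m.kind for m in self.measures}
        for kind in ("effectiveness", "efficiency", "satisfaction"):
            if kind not in covered:
                notes.append(f"no {kind} measure specified")
        if self.measures and not self.test_method:
            notes.append("measures are specified but no test method is given")
        return notes
```

A record like this can start as a communication checklist with many open notes and be tightened into a formal specification as the notes are resolved.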

Communication Among Members of the Development Team

This information facilitates communication among the members of the development or supplier organization. It is important that all concerned groups in the supplier organization understand the usability requirements before design begins. Benefits include the following:

- Reducing risk of product failure. Specifying performance and satisfaction criteria derived from existing or competitor systems greatly reduces the risk of product failure as a result of releasing a product that is inferior to existing or competitor systems.
- Reducing the development effort. This information provides a mechanism for the various concerned groups in the customer's organization to consider all of the requirements before design begins and reduces later redesign, recoding, and retesting. Review of the requirements specified can reveal misunderstandings and inconsistencies early in the development cycle, when these issues are easier to correct.
- Providing a basis for controlling costs. Identifying usability requirements reduces the risk of unplanned rework later in the development process.
- Tracking evolving requirements by providing a format to document usability requirements.

Communication Between Customers and Suppliers

A customer organization can specify usability requirements to accurately describe what is needed. In this scenario, the information helps supplier organizations understand what the customer wants and supports the proactive collaboration between a supplier and a customer.

Specification of Requirements

When the product requirements are a matter for agreement between the supplier and the customer, the customer organization can specify one or more of the following:

- intended context of use,
- user performance and satisfaction criteria, and
- test procedure.

The Common Industry Specification for Usability Requirements provides a baseline against which compliance can be measured.

Contributions to System Design Phases

Usability requirements should be integrated with other systems engineering activities. For example, the ISO/IEC 15288 standard (International Organization for Standardization, 2002) for system life-cycle processes includes the user-centered activities in the stakeholder requirements definition process as shown in Box 7-1.

BOX 7-1

User-Centered Activities for Stakeholder Requirements

- Identify the individual stakeholders or stakeholder classes who have a legitimate interest in the system throughout its life cycle.
- Elicit stakeholder requirements. Stakeholder requirements are expressed in terms of the needs, wants, desires, expectations, and perceived constraints of identified stakeholders.
- Scenarios are used to analyze the operation of the system in its intended environment and to identify requirements that may not have been formally specified by any of the stakeholders, for example, legal, regulatory, and social obligations.
- The context of use of the system is identified and analyzed. Included in the context analysis are the activities that users perform to achieve system objectives, the relevant characteristics of the end-users of the system (e.g., expected training, degree of fatigue), the physical environment (e.g., available light, temperature) and any equipment to be used (e.g., protective or communication equipment). The social and organizational influences on users that could affect system use or constrain its design are analyzed when applicable.
- Identify the interaction between users and the system. Usability requirements are determined, establishing, as a minimum, the most effective, efficient, and reliable human performance and human-system interaction. When possible, applicable standards, for example ISO 9241 series, and accepted professional practices are used in order to define (1) physical, mental, and learned capabilities; (2) workplace, environment, and facilities, including other equipment in the context of use; (3) normal, unusual, and emergency conditions; and (4) operator and user recruitment, training, and culture.
- Establish with stakeholders that their requirements are expressed correctly.
- Define each function that the system is required to perform and how well the system, including its operators, is required to perform that function.
- Define technical and quality in use measures that enable the assessment of technical achievement.

Strengths, Limitations, and Gaps

Establishing high-level usability requirements that can be tested provides the foundation for a mature approach to managing usability in the development process. But while procedures for establishing these requirements are relatively well established in standards, they are not widely applied or understood, and there is little guidance on how to establish more detailed user interface design requirements.

With most emphasis in industry on formative evaluation to improve usability, there is often a reluctance to invest in summative evaluation in the final stages of a project. Formal summative evaluation against established usability criteria is needed to obtain a valid measure of usability.

As much of systems development is carried out on a contractor-supplier basis (even if the supplier is internal to the customer organization), it is for the contractor to judge whether the associated risk reduction is sufficient to justify the investment in establishing and validating usability requirements.

Usability requirements can also provide significant benefits in clarifying user needs and providing explicit user-oriented goals for development, even if they cannot be exhaustively validated. If there are major usability problems, even the results from testing three to five participants would be likely to provide advance warning of a potential problem (for example, if none of the participants can complete the tasks, or if task times are twice as long as expected).

WORK DOMAIN ANALYSIS

Overview

Among the questions that arise when facing the design of a new system are the following: What functions will need to be accomplished? What will be automated, and what will be performed by people? If people will be involved, how many people will it take, and what will be their role? What information and controls should be made available, and how should they be presented to enhance performance? What training is required?

One approach to answering these questions is to start with a list of the tasks to be accomplished and perform task analyses to identify the sequence of actions entailed, the information and controls required to perform those actions, and the implications for number of people and training required. This approach works well when the tasks to be performed and conditions of use can be easily specified a priori (e.g., automated teller machines). However, in the case of highly complex systems (e.g., a process control plant, a military command and control system) unanticipated situations and tasks inevitably arise.

Work domain analysis techniques have been developed to support analysis and design of these more complex systems, in which all possible tasks and situations cannot be defined a priori. Work domain analysis starts with a functional analysis of the work domain to derive the functions to be performed and the factors that can arise to complicate performance (Woods, 2003). The objective is to produce robust systems that enable humans to effectively operate in a variety of situations—both ones that have been anticipated by system designers and ones that are unforeseen (e.g., safely shutting down a process control plant with an unanticipated malfunction).

Work domain analysis methods grew out of an effort to design safer and more reliable nuclear power plants (Rasmussen, 1986; Rasmussen, Pejtersen, and Goodstein, 1994). Analysis of accidents revealed that operators in many cases were faced with situations that were not adequately supported by training, procedures, and displays because they had not been anticipated by the system designers. In those cases, operators had to compensate for inadequate information or resources in order to recover and control the system. This led Rasmussen and his colleagues to develop work domain analysis methods to support development of systems that are more resilient in the face of unanticipated situations.

A work domain analysis represents the goals, means, and constraints in a domain that define the boundaries within which people must reason and act. This provides the framework for identifying functions to be performed by humans (or machines) and the cognitive activities those entail. Displays can then be created to support those cognitive activities. The objective is to create displays and controls that support flexible adaptation by revealing domain goals, constraints, and affordances (i.e., allowing the users to "see" what needs to be done and what options are available for doing it).

A work domain analysis is usually conducted by creating an abstraction hierarchy according to the principles outlined by Rasmussen (1986). A multilevel goal-means representation is generated, with abstract system purposes at the top and concrete physical equipment that provides the specific means for achieving these system goals at the bottom. In many instances, the levels of the model include functional purpose (a description of system purposes); abstract function (a description of first principles and priorities); generalized function (a description of processes); physical function (a description of equipment capabilities); and physical form (a description of physical characteristics, such as size, shape, color, and location).
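The goal-means structure of an abstraction hierarchy can be sketched as a small graph. This is a minimal illustration under stated assumptions: the class and method names are hypothetical, links are restricted to adjacent levels as a simplification, and the example nodes are invented for illustration rather than taken from any published analysis.

```python
from enum import IntEnum


class Level(IntEnum):
    FUNCTIONAL_PURPOSE = 1    # system purposes
    ABSTRACT_FUNCTION = 2     # first principles and priorities
    GENERALIZED_FUNCTION = 3  # processes
    PHYSICAL_FUNCTION = 4     # equipment capabilities
    PHYSICAL_FORM = 5         # size, shape, color, location


class AbstractionHierarchy:
    """Goal-means graph: each node is an end for nodes below, a means for nodes above."""

    def __init__(self):
        self.levels = {}  # node name -> Level
        self.means = {}   # node name -> names one level below that achieve it
        self.ends = {}    # node name -> names one level above that it serves

    def add_node(self, name, level):
        self.levels[name] = level
        self.means.setdefault(name, set())
        self.ends.setdefault(name, set())

    def link(self, end, means):
        # Simplifying assumption: a means sits exactly one level below its end.
        if self.levels[means] != self.levels[end] + 1:
            raise ValueError("means must be one level below its end")
        self.means[end].add(means)
        self.ends[means].add(end)

    def how(self, name):
        """Move down: what means are available for achieving this node?"""
        return sorted(self.means[name])

    def why(self, name):
        """Move up: what ends does this node serve?"""
        return sorted(self.ends[name])


# Illustrative nodes only.
ah = AbstractionHierarchy()
ah.add_node("Prevent radiation release", Level.FUNCTIONAL_PURPOSE)
ah.add_node("Maintain heat balance", Level.ABSTRACT_FUNCTION)
ah.add_node("Core cooling", Level.GENERALIZED_FUNCTION)
ah.add_node("Coolant pump", Level.PHYSICAL_FUNCTION)
ah.link("Prevent radiation release", "Maintain heat balance")
ah.link("Maintain heat balance", "Core cooling")
ah.link("Core cooling", "Coolant pump")
```

Asking `how` of a goal lists the alternative means for achieving it, and asking `why` of a piece of equipment lists the functions it supports, which is the kind of reasoning the displays derived from the analysis are meant to support.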

Work domain analyses do not depend on a particular knowledge acquisition method. Any of the knowledge acquisition techniques covered in Chapter 6 can be used to inform a work domain analysis. In turn, the results of the work domain analysis provide the foundation for further analyses to inform human-system integration.

A growing number of HSI approaches are grounded in a work domain analysis. A prominent example is cognitive work analysis (Rasmussen, 1986; Rasmussen et al., 1994; Vicente, 1999), which uses work domain analysis as the foundation for deriving implications for system design and related aspects of human-system integration, including function allocation, display design, team and organization design, and knowledge and skill training requirements. Burns and Hajdukiewicz (2004) provide design principles and examples of creating novel visualizations and support systems based on a work domain analysis.

Applied cognitive work analysis provides a step-by-step approach for performing and linking the results of a work domain analysis to the development of visualizations and decision-aiding concepts (Elm et al., 2003). These include

- using a functional abstraction network to capture domain characteristics that define the problem space confronting domain practitioners.
- overlaying cognitive work requirements on the functional model as a way of identifying the cognitive demands/tasks/decisions that arise in the domain and require support.
- identifying information/relationship requirements needed to support the cognitive work identified in the previous step.
- specifying representation design requirements that define how the information/relationships should be represented to practitioner(s) to most effectively support the cognitive work.
- developing presentation design concepts that provide physical embodiments of the representations specified in the previous step (e.g., rapid prototypes that embody the display concepts).

Each design step produces a design artifact; collectively, these artifacts form a continuous design thread that provides a traceable link from cognitive analysis to design.

Work-centered design (Eggleston, 2003; Eggleston et al., 2005) is another example of an HSI approach that relies on a work domain analysis. Key elements of work-centered design include (a) analysis and modeling of the demands of work, (b) design of displays/visualizations that reveal domain constraints and affordances, and (c) use of work-centered evaluations that probe the ability of the resultant design to support work across a representative range of work context and complexities.

FIGURE 7-3 Selected portions of a work domain representation for a pressurized water reactor nuclear power plant.

SOURCE: Roth et al. (2001). Used with permission of Lawrence Erlbaum Associates.

Shared Representations

The shared representation produced as output from a work domain analysis is typically a graphic representation of domain goals, means, and constraints. Figure 7-3 provides an example of a graphic work domain representation that was developed for a nuclear power plant design. The work domain representation specifies the primary goals of the plant (generate electricity and prevent radiation release), the major plant functions in support of those goals (Level 2 functions in the figure) and the plant processes available for performing the plant functions (Levels 3 and 4 in the figure). Level 4 specifies the major engineered control functions available for achieving plant goals. This is the level at which manual and automatic control actions can be specified to affect goal achievement.

While work domain analyses have often adopted Rasmussen's abstraction hierarchy formalism, the results of a work domain analysis can take multiple forms. These include alternative network representations (e.g., Elm et al., 2003), prose descriptions of the characteristics of the work domain, and concept maps.

Uses of Methods

Work domain analyses complement more traditional task analysis approaches. Traditional task analyses model how tasks in a domain are performed or should be performed. Work domain analyses model the problem space in which reasoning and action can take place. The work domain representation provides the basis for deriving the information required to enable domain practitioners to understand and reason about the domain at different levels of abstraction, ranging from domain purposes (e.g., prevent radiation release) all the way down to the particular physical systems (e.g., pumps and valves) available for achieving the domain goals.

The output of a work domain analysis is used to inform further analyses that feed different elements of human-system integration. Table 7-1 provides a summary of the major elements of a cognitive work analysis that provide traceable links between the results of the work domain analysis and implications for system design, including function allocation decisions, team and organization design, design of physical and information systems including displays, personnel selection and training, development of procedures, specification of test cases to drive system evaluation, and conduct of human reliability analyses as part of risk-based analyses.

TABLE 7-1 Analytic Tools Involved in the Cognitive Work Analysis Methodology

| Phases of Cognitive Work Analysis | Description |
| --- | --- |
| Work domain analysis | Analyzes the purposes and physical context in which domain practitioners operate. This includes a description of domain goals, means available for achieving those goals, and constraints (e.g., physical constraints, sociopolitical constraints). |
| Control task analysis | Identifies what needs to be done in a work domain. This includes a description of the work situations that can arise and the work functions that need to be performed, independent of who (person or machine) will perform them or the detailed strategies to be used. |
| Strategies analysis | Analysis of strategies for making decisions and carrying out tasks, independent of who will carry them out. |
| Social organization and cooperation analysis | Focuses on who can carry out the work, how it can be distributed or shared, and how it can be coordinated. This includes allocation of work among individuals and/or machines, organization of individuals into teams and larger organizational units, and communication and coordination requirements. |
| Worker competencies analysis | Analysis of perceptual and cognitive requirements of workers (e.g., skills, knowledge, attitudes) to foster understanding and reduce workload. |

Use of Work Domain Analysis in the Port Security Case Study

Work domain analysis has been an integral part of the port security HSI work described in Chapter 5. One recent application involved determining potential technology insertion points for cargo screening at seaports where containers move directly from ship to rail, without exiting through a truck gate. In order to evaluate this domain comprehensively, interviews were conducted with terminal operations managers, physical site maps were collected, and terminal operations walkthroughs were conducted. The information was synthesized into descriptions of current operations at each of the terminals and rail yards, with a focus on identifying common and contrasting operational practices, speed of operations, overall time requirements for ship servicing, dwell time of containers in storage stacks, labor and equipment requirements, potential radiation portal screening choke points, and issues related to the operational impact of screening at these locations. The findings were used to define screening concepts that would maximize threat detection while minimizing impact on commerce.

Other Example Applications

One of the strengths of work domain analysis methods is their ability to drive the design of novel visualizations tailored to the demands of the work (Burns and Hajdukiewicz, 2004). Successful applications range from process control (Roth et al., 2001; Jamieson and Vicente, 2001), to aircraft displays (Dinadis and Vicente, 1999), to medical device applications (Lin, Vicente, and Doyle, 2001), to military command and control (Martinez, Bennett, and Shattuck, 2001; Potter et al., 2002), to network management (Duez and Vicente, 2005; Burns et al., 2000), and to defense against cyber war (Gualtieri and Elm, 2002). In each case, the approach yielded novel decision support concepts that were fine-tuned to the cognitive work requirements of the domain and markedly different from traditional displays in the domain.

One example drawn from a process control application is a large wall-mounted group view display intended to enable power plant control room teams to maintain broad situation awareness of the status of the plant. The goal was to increase the ability of operators to quickly assess plant state and effectively control the plant in both normal and abnormal conditions.

The content and organization of the group view display were based on a work domain analysis (see Figure 7-4). The group view display was organized around the major plant functions that need to be achieved to maintain safety and power generation goals, and the physical processes that support them. The objective was to enable operators to rapidly assess whether the major plant functions are being achieved and the state of active plant processes that are supporting those plant functions. In cases of plant disturbances, in which one or more of the plant goals are violated, a functional representation allows operators to assess what alternative means are available for achieving the plant goals.

A formal evaluation study demonstrated that the functionally organized overview display was more effective than, and was preferred by operators over, a more conventional overview display that used a physical plant mimic as the organizational scheme. Teams performed significantly better with the functionally organized overview display than with the physical mimic display in identifying target events (24-percent improvement) and diagnosing plant disturbances (27-percent improvement) (Roth et al., 2001).

The results illustrate the value of work domain analysis in deriving the critical goals, means, and constraints in the domain that impact decision making and in generating novel displays that effectively communicate these factors to support individuals and teams.

FIGURE 7-4 Schematic representation of a wall-mounted group view display for a compact power plant control room derived from a cognitive work analysis approach. SOURCE: Roth et al. (2001). Used with permission of Lawrence Erlbaum Associates.

Work domain analyses promote design of novel visualizations that enable practitioners to readily apprehend and assimilate domain information required to support complex decisions (Burns and Hajdukiewicz, 2004). One recent example is a work-centered support system visualization that was developed to support dynamic mission replanning in a military airlift organization (Roth et al., 2006). A work domain analysis identified domain factors that enter into and complicate airlift mission planning decisions, including the need to match loads to currently available aircraft, obtain diplomatic clearance for landings in and flights over foreign nations, balance competing airlift demands, and conform to airfield and aircrew constraints. Although existing information systems included all the relevant data, operational personnel had to navigate across multiple tabular displays to extract and mentally collate the necessary information. The work domain analysis provided the basis for design of a novel timeline display that enables operational personnel to graphically "see" the relationships between mission plan elements and resource constraints (e.g., airfield operating hours, durations of diplomatic clearances, crew rest requirements) to detect and address violations. A formal evaluation comparing performance with the timeline display to performance with the legacy system established significant improvement in performance with the timeline display (Roth et al., 2006).
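The kind of violation check that such a timeline makes visible can be sketched in a few lines of Python. This is a hedged illustration, not the logic of the system described by Roth et al. (2006): the `Window` type, its field names, and the hours used are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    """A resource constraint expressed as a permitted time window (mission hours)."""
    label: str
    start: float
    end: float


def violations(event_time, windows):
    """Return labels of the windows that do not contain the planned event time."""
    return [w.label for w in windows if not (w.start <= event_time <= w.end)]


# Hypothetical landing planned at hour 22.5 of the mission clock.
constraints = [
    Window("airfield operating hours", 6.0, 22.0),
    Window("diplomatic clearance", 0.0, 48.0),
]
print(violations(22.5, constraints))
```

In a tabular legacy system each window would sit in a different display; drawing them on a common time axis lets a planner see the same conflict at a glance.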

Contributions to System Design Phases

A work domain analysis is usually performed at several levels of detail, depending on the stage of system development and complexity of the system being analyzed. A work domain analysis is performed as a preliminary analysis to identify information needs, critical constraints, and information relationships that are necessary for successful action and problem management within the domain. As the design evolves, the work domain analysis can be deepened and used to inform display design, function identification and allocation decisions, team and organization design, as well as identification of knowledge and skills (e.g., accurate system mental models) that are needed to effectively support performance in the domain.

The application of work domain analysis throughout the HSI design cycle has been successfully illustrated by Neelam Naikar and her colleagues, who have been applying work domain analysis and cognitive work analysis methods to the design of a first-of-a-kind Australian AWACS-style air defense platform called the Airborne Early Warning and Control (Naikar and Sanderson, 1999, 2001; Naikar et al., 2003; Naikar and Saunders, 2003; Sanderson et al., 1999; Sanderson, 2003). Their work has demonstrated the usefulness of work domain analysis throughout the system design cycle, including:

- Evaluation of alternative platform design proposals offered by different vendors.
- Determination of the best crew composition for a new platform.
- Definition of training and training simulator needs.
- Assessment of risks associated with upgrading existing defense platforms.

Work domain analyses have likewise been used successfully to provide early input into the HSI issues in a number of large-scale first-of-a-kind projects, including the design of a next-generation power plant (Roth et al., 2001), a next-generation U.S. Navy battleship (Bisantz et al., 2003; Burns, Bisantz, and Roth, 2004), and a next-generation Canadian frigate (Burns, Bryant, and Chalmers, 2000).

Strengths, Limitations, and Gaps

A primary strength of work domain analysis is its emphasis on uncovering and representing domain characteristics and constraints that impact cognitive and collaborative work, and on guiding the design of systems that are fine-tuned to supporting the work demands and enabling domain practitioners to respond adaptively to a broad range of situations. It complements traditional sequential task analysis approaches by providing an explicit shared representation of domain goals, characteristics, and constraints (Miller and Vicente, 1999; Bisantz et al., 2003).

An often-cited limitation of work domain analysis methods is that exhaustively mapping the characteristics and constraints of a domain can be resource-intensive. However, as multiple projects have shown, it is not necessary to perform an exhaustive domain analysis to reap the benefits (e.g., Bisantz et al., 2003). A work domain analysis can be performed at different levels of detail, depending on the complexity of the system being analyzed and the phase of analysis. A preliminary, high-level work domain analysis can be performed early in the HSI process to identify information needs, critical constraints, and information relationships that are necessary for successful action and problem management in the domain. As the design evolves, the work domain analysis can be elaborated.

A related strength of work domain analysis methods is that they encourage explicit links between analysis and design via intermediate design artifacts. As the design evolves, these artifacts can be expanded and modified to provide a traceable link between domain demands, cognitive and performance requirements, and system features intended to provide the requisite support.

One of the current gaps that limit the impact of work domain analysis methods is the paucity of computational tools to facilitate analysis and serve as a core living repository of domain knowledge that could be drawn on throughout the system life cycle. While there has been some progress on tool development, such as the work domain analysis workbench (Skilton, Cameron, and Sanderson, 1998) and the cognitive systems engineering tool for analysis, more comprehensive and robust tools are needed.

WORKLOAD ASSESSMENT

Overview

One of the most common issues that arise in complex system design is estimating whether the aggregate workload associated with the tasks assigned to system users will result in too much to do in the time available, leading to stress, unreliable performance, or, in some cases, system failure. Workload comes in different varieties and may be assessed from many different perspectives.

For tasks involving significant physical effort, physical workload is an ergonomic issue and, in sustained task performance, is usually measured in terms of oxygen consumption or heart rate. Prediction of physical workload depends on having measurement results from other related activities and conditions and estimating the differences between the known results and the postulated activity. Guidelines are available to assess excessive physical workload.

Structurally, the human limbs and eyes can be directed to only one location at a time, and excessive workload can result from a requirement that they be directed to too many places for the time available or that they need to be in different places at the same time. Speech communication is similarly limited. Assessing this kind of structural interference requires estimates or measurements of the time required for the various activities required of the limbs, eyes, and voice in each task, laying them out in sequence, subject to temporal constraints, and evaluating the potential conflicts.
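Laying activities out on a timeline and checking for conflicts can be sketched as an interval-overlap test per resource. The Python example below is a minimal sketch; the activities, resources, and times are invented for illustration and would in practice come from task analysis estimates or measurements.

```python
from itertools import combinations

# (activity, resource, start_s, end_s); all values are hypothetical.
ACTIVITIES = [
    ("read altimeter", "eyes", 0.0, 1.5),
    ("scan outside view", "eyes", 1.0, 3.0),
    ("adjust throttle", "right hand", 0.5, 2.0),
    ("radio call", "voice", 2.0, 5.0),
]


def structural_conflicts(activities):
    """Return pairs of activities that need the same resource at overlapping times."""
    conflicts = []
    for a, b in combinations(activities, 2):
        same_resource = a[1] == b[1]
        overlap = a[2] < b[3] and b[2] < a[3]  # half-open interval overlap
        if same_resource and overlap:
            conflicts.append((a[0], b[0]))
    return conflicts


print(structural_conflicts(ACTIVITIES))
```

Here the two visual activities overlap between 1.0 and 1.5 seconds, so the eyes would need to be in two places at once; the manual and vocal activities do not compete for the same resource.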

The most challenging evaluation is of mental workload. Humans can generally direct their attention to only one task or activity at a time. That is not to say that one cannot sometimes process, to some level of completeness, multiple streams of information, especially when they are coordinated or relate to the same task. There is a large literature on attention, attention management, and multitasking that is beyond the scope of this report (see, for example, Chaffin, Anderson, and Martin, 1999; Wickens and Hollands, 1999; and Charlton, 2002).

The distinctions among these types may become blurred. Thinking is often accompanied by visual exploration, and it is difficult to distinguish the structural constraint of where the eyes are looking from the mental load of reasoning about what is seen. Demanding physical effort may capture attention that could otherwise be directed to cognitive task performance.

Predicting mental workload has proved daunting, but there are some modeling techniques that have been applied. Most depend on having the results of a detailed task analysis, requiring an understanding of the cognitive components of the task and estimates of the time that will be associated with each task element. McCracken and Aldrich (1984) defined the visual, auditory, cognitive, and perceptual-motor load associated with a collection of common elemental tasks, such as reading an instrument or operating a control. Then, after making corresponding estimates of the time required for each task element in context, they used task analysis results to bring together the elemental components into estimates of the aggregate loads as a function of time on each modality. This basic approach has also been used in a variety of modeling contexts, including network models and more detailed human performance simulations (Laughery and Corker, 1997).
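The aggregation step described above can be sketched as follows. This is not McCracken and Aldrich's published model: the load ratings, task elements, one-second time step, and overload limit are placeholder assumptions, chosen only to show how elemental loads can be summed per modality as a function of time.

```python
CHANNELS = ("visual", "auditory", "cognitive", "perceptual-motor")

# Hypothetical per-element load ratings on each channel (arbitrary scale).
ELEMENT_LOAD = {
    "read instrument": {"visual": 4.0, "cognitive": 2.0},
    "operate control": {"visual": 1.0, "perceptual-motor": 3.0},
    "listen to radio": {"auditory": 4.0, "cognitive": 3.0},
}

# Task analysis output: (element, start_s, end_s). Values are illustrative.
TIMELINE = [
    ("read instrument", 0, 3),
    ("operate control", 2, 5),
    ("listen to radio", 2, 6),
]


def channel_load(timeline, t):
    """Sum the load on each channel from all task elements active at time t."""
    totals = dict.fromkeys(CHANNELS, 0.0)
    for element, start, end in timeline:
        if start <= t < end:
            for channel, load in ELEMENT_LOAD[element].items():
                totals[channel] += load
    return totals


def overloaded(timeline, horizon, limit):
    """Return (time, channel) pairs where summed load exceeds an assumed limit."""
    return [
        (t, channel)
        for t in range(horizon)
        for channel, load in channel_load(timeline, t).items()
        if load > limit
    ]


print(overloaded(TIMELINE, horizon=6, limit=4.5))
```

With these made-up numbers, the only flagged interval is the second in which all three elements are active, where the visual and cognitive channels both exceed the assumed limit.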

When prediction is not possible or leads to uncertain results, it is necessary to undertake a study to estimate mental workload from actual measurements. There are fundamentally four kinds of measurements and analysis that have been used: (1) varying the task load corresponding to the range of expected task conditions (e.g., pace of input demand, such as air traffic load, or complexity of environment, such as urban versus rural road conditions) and evaluating the functional relation between task performance and task load; (2) introducing independent competing secondary tasks and measuring the quality of performance on the secondary task in the presence of the task under study; (3) asking the user to estimate perceived workload while performing the task or immediately afterward (i.e., subjective assessment, using tools such as the NASA TLX scales; Hart and Staveland, 1988); and (4) employing physiological measures, such as pupil diameter, eye-blink rate, evoked potential responses, or heart rate. There are numerous summary references that document these methods, such as Tsang and Wilson (1997) and Hancock and Desmond (2001).
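As an illustration of subjective assessment, the commonly used weighted NASA-TLX score combines six subscale ratings (0 to 100) with weights obtained from 15 pairwise comparisons between the subscales. The ratings and weights below are made up for the example, and the Python sketch covers only the scoring arithmetic, not the administration procedure.

```python
SUBSCALES = (
    "mental demand", "physical demand", "temporal demand",
    "performance", "effort", "frustration",
)


def tlx_weighted_score(ratings, weights):
    """Weighted NASA-TLX workload: sum(rating * weight) / 15.

    ratings: subscale -> rating on a 0-100 scale.
    weights: subscale -> number of times chosen in the 15 pairwise comparisons.
    """
    if sum(weights[s] for s in SUBSCALES) != 15:
        raise ValueError("weights must total 15 (one per pairwise comparison)")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15


# Hypothetical responses from one participant.
ratings = {"mental demand": 80, "physical demand": 20, "temporal demand": 70,
           "performance": 40, "effort": 60, "frustration": 50}
weights = {"mental demand": 5, "physical demand": 0, "temporal demand": 4,
           "performance": 2, "effort": 3, "frustration": 1}
score = tlx_weighted_score(ratings, weights)
print(score)
```

For this hypothetical participant the weighted score is 66.0 on the 0 to 100 scale, dominated by mental and temporal demand.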

Uses of Method

When individual tasks are time sensitive or when the system users are subjected to the demands of multitasking, excessive workload is one of the paramount issues that can degrade system performance. Whenever a new system is designed or revised, it is important to consider the impact of the design on user workload. Workload estimates are also needed in job design, that is, the assembly of tasks into jobs. Workload is a key component in preparing estimates of needed manpower, and when there is a mandate to reduce staff, workload estimates are the most important consideration. Ultimately, workload is reflected in the personnel requirements forecast. It is an important area for coordination across the HSI domains.

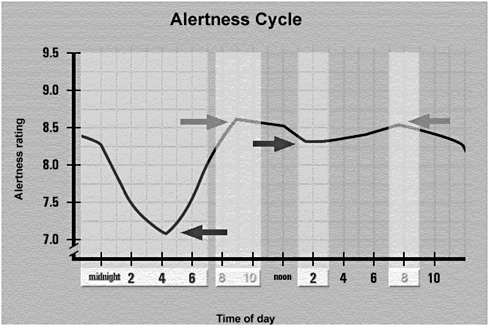

Shared Representations

The primary shared representations are graphs of workload as a function of time or task progress and PERT charts (network diagrams in which milestones are linked by tasks) or Gantt charts (bar charts that illustrate a project schedule). These shared representations illustrate the timelines of activities, showing where overlaps occur, with highlights showing phases in which the workload exceeds limits. For descriptions of these tools, see Modell (1996).

However, in most cases the output of studies assessing workload is expressed in an experiment report. Whenever possible the estimated workload should be compared with acceptable limits.

Contributions to System Design Phases

In a typical system design, consideration of workload begins with the initial task analysis and context of use assessment. In early stages, the estimates will be largely qualitative. Aspects of the design that may be workload sensitive, or where overload presents a substantial task completion or safety risk, are identified. As the design matures, the workload estimates should become more quantitative, and confidence in the estimates will improve. When designs have reached the stage of completion in which a simulation of the task or of alternative task designs can be built, modeling studies or human-in-the-loop evaluations can be undertaken to estimate the workload of critical phases of the operation or critical elements of the system (see the section below on models and simulations). These studies will contribute to the manpower and personnel domains as well and should be coordinated with specialists in those areas. Measuring workload is also important during summative test and evaluation stages of a project.

Strengths, Limitations, and Gaps

The definition, measurement, and prediction of workload, particularly mental workload, have been on the human factors research agenda for more than 30 years. Measurement protocols and modeling approaches are available. It is much harder to define acceptable limits, because these are dependent on the measures used and there is no standardization of the measures, at least for mental workload. Using these measures requires the expertise of human factors professionals. All of the methods provide only approximate answers until the full system design is complete and the workload of using the real system can be evaluated.

Objective measures are usually to be preferred, but they require more effort to instrument and apply to simulated or real task performance. Subjective methods have been shown to be reliable if standardized questionnaires are used. Users can report only their perceptions and, under stressful conditions, perceived workload may be more important than objective workload requirements.

There is a need for more collaboration among the specialists of the manpower, personnel, and human factors domains to ensure that the studies that are undertaken meet the requirements of all these stakeholders. Suitable shared representations are not well developed. Workload models can produce PERT chart–like representations that are useful for detailed analysis of operational concepts, but the output of most workload studies is simply an experiment report. New visualizations are required that are grounded in data but that present it in a form that allows all stakeholders to understand not only what the recommendations are, but also how they are supported by the data.

PARTICIPATORY DESIGN

Overview

The preceding sections of this chapter have emphasized design as conducted by professional designers and engineers. This section focuses on design as a hybrid activity (see, e.g., Muller, 2003) conducted by professionals and end-users together, as co-designers. Much of the background for these concepts was provided in the participatory analysis section of Chapter 6. We restrict the discussion here to design-related concepts within that more general framework.

The principal focus of participatory design has been twofold (Blomberg et al., 2003; Bødker et al., 2004; Greenbaum and Kyng, 1991; Kyng and Mathiassen, 1997; Muller, Haslwanter, and Dayton, 1997; Muller and Kuhn, 1993; Schuler and Namioka, 1993):

- To present design options clearly and understandably to end-users, and
- To provide the means for end-users to make changes in those design options.

This overall philosophy means that end-users are more involved in design and development than is the case in conventional treatments, in which end-users tend to be consulted during requirements elicitation, and again during usability or acceptance testing. By contrast, participatory design typically involves iterative engagements with users as first-class participants at multiple, strategically chosen moments during the specification-design-evaluation processes. When appropriate, this approach supplements the knowledge of engineers and professional designers with the work domain knowledge of the end-users themselves, for a better informed, more efficient development process that typically requires fewer iterations to achieve targeted levels of usability, user satisfaction, and user acceptance.

Participatory design work has focused on issues of theory, context, and practice (for a summary, see Levinger, 1998). In this report, we focus on six sets of practices that have been shown to provide sustained value in system development (for encyclopedic reviews of over 70 participatory practices, see Bødker et al., 2004; Muller, 2003; Muller, Haslwanter, and Dayton, 1997).

Methods and Shared Representations

Scenarios

The analysis phases of scenario-based methods are noted in the previous chapter (Carroll, 1995, 2000; Carroll, Rosson, and Carroll, 2002b, 2003). These activities continue in design. One of the strongest ways to describe a revised or new design is through a story of that design in use. Scenario-based design is based around such stories. Scenario-based design builds on the problem statement through the following steps:

- A set of activity designs (literally, action-oriented scenarios of future use) are constructed and evaluated with end-users. The claims from the previous step (i.e., assertions of value to the end-users) can be used to structure the evaluation.
- An information design is proposed, based on the approved activity designs. Each activity design becomes a reference model for the evaluation of each information design. The information design provides a more detailed perspective on the narrative of the activity design, and is itself a more refined scenario of future use. Again, the claims from the participatory analysis can be used to structure the evaluation.
- A more detailed interaction design is developed, based on a refined and stabilized information design. Each interaction design is an even more refined and developed scenario of future use. The activity designs remain the reference models against which the interaction design is evaluated, again with the potential aid of the claims from the participatory analysis.

In these ways, scenario-based design produces a structured series of narratives, each focused on resolving particular questions. The scenarios remain intelligible and accessible to the end-users, who are encouraged to critique and modify them as needed.

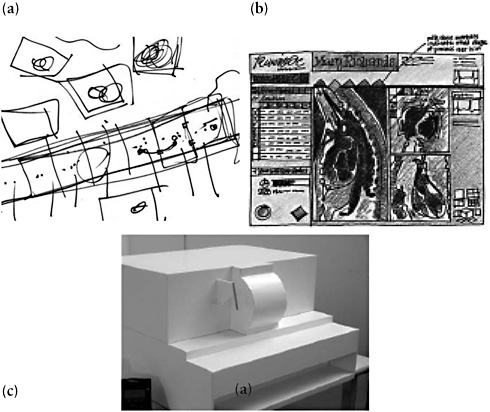

Low-Technology Representations

Another powerful way to tell a story about future use is through enactment of that scenario using tangible materials, such as prototypes of the envisioned technology. If the technology has been completed, then this approach becomes a matter of formative or summative usability evaluation (see Chapter 8). However, in participatory design, the prototype is often left strategically incomplete to encourage and even to require users to contribute their ideas directly to the evolving concept.

One of the most powerful forms of strategic incompleteness is to make a nonfunctional prototype out of low-technology materials (Bødker et al., 1987; Ehn and Kyng, 1991; Muller, 1992; Muller et al., 1995). This approach has several advantages. First, it is easy to produce, and that means that it is easy to revise or abandon (an extreme version of the concept of "throwaway prototype"). Second, it is easy to modify in place—a form of user-initiated design.1 Third, modification of the low-tech representation requires no specialized tools other than domain knowledge. Thus, a low-tech representation becomes another means for leveling the playing field, encouraging end-users to make egalitarian contributions of their knowledge to complement the knowledge of software and design professionals.

Bødker et al. (1987) provided early demonstrations of the value of low-tech mock-ups ("cardboard computers") in critique and redesign of new technologies for newspaper print shops. Muller (1992) provided an evolutionary view of paper-and-pencil materials and associated working practices in the design of user interfaces. Lafrenière (1996) showed a more macroscopic approach involving user-initiated construction of storyboard scenarios through the use of strategically incomplete storyboard frames (see also Muller, 2001). An integration of several of these approaches became a more formal description of proven "bifocal tools" for participatory analysis and design (Muller et al., 1995).

Low-tech representations have the additional advantage of being a form of literal requirements document. That is, the constructed form of the representation is a first approximation of the intended final design of the user interface. In the course of working with the low-tech representation, users and systems professionals usually enact or review one or more scenarios of use. The sequence of events in this scenario (often captured in the form of a video recording; see, e.g., Muller, 1992; Muller et al., 1995) is a first approximation of the user experience and of the user-experienced information design and information architecture that must also be built. In these ways, the simple paper-and-pencil (or cardboard) materials can become powerful engines for explicating and enhancing designs.

| 1 | Note that the use of low-tech materials for design is quite different from the use of low-tech materials for evaluation, as advocated in contextual inquiry and design (Holtzblatt, 2003; Holtzblatt et al., 2004) and in Snyder's paper prototyping approach (2003). These latter approaches describe the use of a low-tech prototype as a valuable proxy for a functioning system in usability testing. However, by using the materials for usability testing, these approaches effectively reduce the user's input into an acceptance test. In participatory design, the goal is for the users to contribute as peer co-designers, not simply as evaluators. |

Theatrical Approaches

The strategy of acting out a use scenario has been another tool of participatory design. Using the theoretical foundation of Boal's theatre of the oppressed (Boal, 1992), participatory designers have staged dramas to elicit discussion of working practices and technology alternatives. The principal method in information technology (e.g., Ehn and Kyng, 1991; Ehn and Sjögren, 1991) has been Boal's forum theatre, in which the designers present a skit with an undesirable outcome and challenge the end-users to modify the script, the props (i.e., the technology), or the setting, and then to reenact the drama, until the outcome is better. A secondary method in information technology (e.g., Brandt and Grunnet, 2000) has been the practice of "frozen images" or tableaux, in which the actors in a drama are asked to stop ("freeze") while the audience asks each actor what her or his character was trying to achieve, what obstacles she or he faced, and how the situation or circumstances should be improved.

As video technology has become a consumer product, users have also become authors of videos to show current work problems and proposed solutions (Björgvinsson and Hillgren, 2004; Buur et al., 2000; Mørch et al., 2004). An explicit tie-in to scenario-based methods was made by Iacucci and Kuutti (2002) in their work on "performing scenarios" (see also Buur and Bødker, 2000).

Ethnographic Methods

Ethnography has figured prominently in the literature on participatory design (e.g., Blomberg et al., 1993, 2003; Mogensen and Trigg, 1992; Suchman, 1987, 2002; Suchman and Trigg, 1991; Trigg, 2000). The specific methods used by ethnographers in design activities tend to invoke other methods, previously described in the section on participatory design. For broader discussions of ethnography, see Chapter 6.

Workshop Methods

Preceding sections have described the use of stories and scenarios, low-technology representations, and user-produced documentaries as methods and materials for participatory analysis. These and other methods have been integrated in the generative workshops of Sanders and colleagues (Sanders, 2000). Generative workshops consist of methods from market research (e.g., focus groups to elicit users' comments), ethnography (observation of users engaged in work), and participatory design (construction of anticipated or desired future objects through low-technology prototyping). The goal of this conjoint "say-do-make" approach is to triangulate on important user needs, working practices, and innovations.

Contributions to the System Design Process

Each participatory design method produces its own characteristic shared representation and contribution; several of these were reviewed in the preceding chapter on analysis. Table 7-2 provides a summary of contributions and shared representations.

TABLE 7-2 Summary of Contributions and Shared Representations in Participatory Design

| Method | Role in System Development Process | Shared Representation and Use |
| --- | --- | --- |
| Participatory scenarios | Design in use (actual use or future use); ongoing opportunity to revisit opportunity analysis and context of use | Layered design documents (activity design, information design, interaction design); stories, storyboards, narratives |
| Low-technology representations | Early designs; design alternatives; throwaway prototypes | Designs; artifacts created during the design process; informal requirements |
| Theatrical approaches | Consequences of designs for work practices; design alternatives | Informal reports; scripts (rare) |
| Workshops, especially generative workshops | Opportunity to revisit context of use and opportunity analysis; designs; design alternatives; consequences of designs for work practices | Designs; artifacts created during the design process; early marketing insights |

Shared Representations

In brief recapitulation, scenario-based methods may produce stories, storyboards, narratives, and use cases; the latter are particularly useful for systems engineering. These materials can become background or reference material for the more detailed work of designers and developers. Alternatively, a more detailed scenario can develop into use cases, which directly inform design and development on an event-by-event (or action-by-action) basis.

Low-technology representations provide first drafts of user interface designs and are suitable inputs to the work of professional designers; the information surrounding them is valuable to resolve questions that designers and implementers might have about why certain features are needed and for what purpose. In addition to the first draft approach, low-technology representations can become detailed design documents, ready for implementation into working hardware or software.

The theatrical methods are similar to the multimedia documentary methods in the preceding chapter. As with the narratives and explanations surrounding a low-technology representation, the additional information in a theatrical method may provide useful contextualization of design recommendations and implementation decisions.

The workshop methods are similar in outcome to the theatrical methods, with the difference that the workshop methods were designed by professional designers to be used by professional designers. Their outcomes are thus structured to be useful inputs to the next, more formalized design steps.

Strengths, Limitations, and Gaps

Strengths and weaknesses of participatory design are similar to those for participatory analysis, as discussed in Chapter 6. A principal strength of the participatory approaches is the collection and use of detailed, in-depth information from the users' perspective. As discussed above, users have access to a different kind of knowledge from that of systems professionals, and the users' knowledge can be very valuable for informing design with the realities of how the work gets done, as well as for defining new opportunities and understanding the context of use (Chapter 6). A second principal strength is the growing body of practices for combining the users' knowledge with the knowledge of design and implementation professionals (and other professionals) through well-understood methodologies.

There are two principal weaknesses of the participatory approaches. The first is a matter of appearance. Participatory approaches involve knowledge holders who have historically been undervalued in systems development, and therefore the participatory designer may be required to justify this "unusual" approach to more traditional practitioners and management. Similarly, the strategic informality of the participatory approaches may present an appearance problem, namely the use of low-technology, narrative, and expressive media that are so necessary for full and effective communication across disciplinary boundaries.

The second principal weakness of the participatory approaches is that it is sometimes difficult to integrate their informal, open, "soft" outcomes with the kinds of precise knowledge that are typically required as inputs to downstream systems development activities. This problem is rapidly becoming a nonissue, through the integrative methodologies pioneered by Kensing and Madsen (1993), the integrations with formal methods proposed by Muller, Haslwanter, and Dayton (1997), and the development of a participatory information technology methodology (Bødker et al., 2004).

CONTEXTUAL DESIGN

In the participatory analysis section of Chapter 6, we summarized the contextual inquiry process, including the three activities of contextual inquiry, interpretation, and affinity analysis, as well as the construction of the five models characterized, respectively, in flow, sequence, physical, cultural, and artifact terms. Contextual inquiry can lead in turn to contextual design (Holtzblatt, 2003; Holtzblatt et al., 2004), which includes the following activities:

- Visioning and storyboarding: Develop new concepts and concretize them in the form of stories ("visions"). Iteratively refine these concepts via storyboards.

- User environment design: Develop an abstract version of the structure and function clustering of the system's components and operations independently of the user interface and implementation (Holtzblatt, 2003, p. 943).

It is interesting to note that the stories and storyboards are accessible to end-users, whereas the larger components of the visioning and user environment design activities are explicitly stated to avoid issues of user interfaces or user experiences. Thus, while contextual inquiry involved a major component of user participation in analysis, much of the work of contextual design focuses more on the product team and its professional staff, returning to the users for a more traditional usability evaluation (see Chapter 8).

Contextual design has been designed to be well integrated into a flow of work beginning with contextual inquiry and proceeding into development.

The shared representations of contextual design (see above) are structured and sized for immediate uptake by systems engineers and professional designers.

Contributions to the System Design Process

Contextual design has been developed for effective transfer of knowledge from designers to other systems professionals. The form of storyboarding used in contextual design is intended for rapid uptake (as in use cases), and the structure and function clustering is one of the principal outcomes of a requirements analysis, to assist other systems professionals in making choices in function allocation.

Shared Representations

Contextual design is intended to produce formal requirements and specifications. The vision statements and descriptions of current or future end-user work environments are inputs to those more formal documents.

Strengths, Limitations, and Gaps

As noted in the preceding chapter, the contextual inquiry and design methods involve more research time and more meeting time than some less formal methods, such as participatory design. We proposed in that chapter that there is a straightforward trade-off between informal, open methods that maximize the contributions of end-users (with their own unique knowledge) and more formal, closed methods that maximize subsequent uptake by the development team.

PHYSICAL ERGONOMICS

Overview

Physical ergonomics is concerned with human anatomical, anthropometric, physiological, and biomechanical characteristics as they relate to physical activity.2 Both complex and simple systems often require cognitive as well as physical activity from the user or group of users. Clearly, it is best to design an ergonomically correct system in the early stages of system design (Kroemer, Kroemer, and Kroemer-Elbert, 2001), and ideally a formal, institutionalized process for incorporating ergonomics into system design is already in place. The steps in the overall ergonomic process are (1) organizing the process, (2) identifying the problem, (3) analyzing the problem, (4) developing a solution, (5) implementing the solution, and (6) evaluating the result (Kilbom and Petersson, 2006).

In ergonomics, the philosophy behind the methods is one of prevention and designing the system to minimize risk factors. Without such a proactive, planned approach, the human cost can range from mild discomfort to cumulative trauma or injury and possibly even death. It is therefore a serious matter to consider the human user's physical limitations and capabilities when designing systems. The major ergonomic considerations for healthy, safe, and efficient workplaces and environments are worker task position (reach, grasp, lines of sight, work heights, etc.), posture (seated and standing), clearances (access, movement space, activity space), machine control (visibility, control dimensions), force application (allowable forces), workstation layout (display and control positions and relationships), and physical environment (lighting, noise, climate, vibration, radiation, chemical, psychosocial, spatial, etc.) (Wilson, 1998). Anthropometric (and other) data for ergonomic design in new system design can be found in several published military and civilian guidelines and standards. Human digital modeling is another excellent way to test design alternatives. In addition, controlled testing and laboratory experimentation (e.g., fitting and user testing) can be used to empirically optimize ergonomic design.
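One common way such anthropometric data are applied is to size reaches for smaller users and clearances for larger users. The following is a minimal sketch of that idea, not a procedure given in this chapter: it assumes the body dimension is approximately normally distributed, and the function name, the mean, and the standard deviation are hypothetical placeholders rather than values from any published standard.

```python
from statistics import NormalDist


def percentile_value(mean_mm: float, sd_mm: float, percentile: float) -> float:
    """Return the body dimension (mm) at a given population percentile.

    Assumes the dimension is normally distributed, which is only an
    approximation for real anthropometric data.
    """
    if not 0 < percentile < 100:
        raise ValueError("percentile must be strictly between 0 and 100")
    return NormalDist(mu=mean_mm, sigma=sd_mm).inv_cdf(percentile / 100)


# Hypothetical forward-reach statistics (illustrative only, not survey data).
REACH_MEAN_MM = 740.0
REACH_SD_MM = 40.0

# Controls placed within the 5th-percentile reach can be reached by about
# 95 percent of this hypothetical population.
max_control_distance = percentile_value(REACH_MEAN_MM, REACH_SD_MM, 5)
print(f"Place controls within {max_control_distance:.0f} mm")
```

In practice the mean and standard deviation would be taken from the published guideline or standard appropriate to the intended user population, and the result would be checked through fitting trials or digital human modeling.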

In physical ergonomics, concern for the user ranges from perceived discomfort to physical injury. Assessment methods can be used to identify prospective problems in existing systems or for evaluating alternatives in new systems. Using one class of physical ergonomics issues as an example, musculoskeletal injuries often begin with users experiencing discomfort (Hedge, 2005). Left untreated, these perceptions of discomfort can escalate into pain. Untreated pain can then result in musculoskeletal injury (e.g., tendonitis, tenosynovitis, carpal tunnel syndrome) (Hedge, 2005).

Finally, there has been an effort to automate the tools with which physical ergonomics is considered in the design process. Digital human models are ergonomic analysis and design tools that are intended to be used early in the product and system development process to improve the physical design of systems and workstations (see section on models and simulation).

Shared Representations

Shared representations range from physiological tests, such as the measurement of systolic blood pressure, to subjective instruments, such as ratings of perceived exertion (Louhevaara et al., 1998). Physical ergonomics methods that focus on assessing discomfort center on self-report instruments. Such shared representations have the downside of subjectivity. In the assessment of posture, direct observation can be used, with shared representations taking the form of checklists and other data-acquisition and data-reduction tools. Fatigue assessments, while attempting to be quantitative, also rely on subjective ratings, and thus shared representations take the form of the output of rating-based instruments. Finally, methods to assess physical risk also tend to rely on shared representations that are at least partially subjective, typically taking the form of checklists and rating scales. With respect to human digital modeling, an avatar or virtual human with specific population attributes is rendered as it dynamically performs tasks in a system; more simply, a dynamic simulation of the human-system interaction is rendered. More detail on the shared representations, including examples, follows in the context of methods.

Uses of Methods

Methods for Assessing Discomfort

In addition to serving as an early warning sign for injury, discomfort can in and of itself be costly in terms of affecting the quality or quantity of work performed (Hedge, 2005). Since discomfort cannot be observed directly and must be perceived and reported by the user, methods for assessment involve self-report instruments. One of the earliest methods to assess a user's degree of musculoskeletal discomfort is a checklist instrument called PLIBEL (Hedge, 2005). This literature-derived instrument allows users to evaluate ergonomics hazards associated with five body regions (see Kemmlert, 1995). The assessment can be applied at the task or system level. In the context of system development within an HSI framework, PLIBEL can help identify specific bodily areas that require attention in design or redesign. For example, if excessive reaches or awkward postures are required by a newly designed jet cockpit "highway in the sky" display, PLIBEL will identify the physical regions of the body at risk.

Another group of discomfort instruments to consider in physical ergonomics assessment is that promoted by the National Institute for Occupational Safety and Health (NIOSH) (Hedge, 2005). Self-report measures of discomfort are widely accepted by the agency (see Sauter et al., 2005). Most of these instruments combine body maps with questions and, like PLIBEL, attempt to identify particular body regions at risk.

Additional methods for assessing discomfort include the Dutch Musculoskeletal Survey, the Cornell Musculoskeletal Discomfort Survey, and the Nordic Musculoskeletal Questionnaire (Hedge, 2005).

Methods for Assessing Posture

Workplace posture is a function of the interaction of many factors, including workstation design, equipment design, and methods (Keyserling, 1998). As indicated by Hedge (2005), there are various reasons why self-report instruments are less desirable than unobtrusive observations of, for example, posture. Posture in a sense is a surrogate for musculoskeletal functioning. In system development, users in mock-ups or users in existing systems can be evaluated in real time or through recordings to assess postural risk. The Quick Exposure Checklist involves both observer and user assessments. Its exposure scores are expressed as percentages, and actions ranging from "acceptable" to "investigate and change immediately" are recommended (Li and Buckle, 1999, 2005). The Quick Exposure Checklist can therefore be applied to assessing risks associated with system tasks when evaluating an existing system for redesign or when testing a prototype of a new system.

A widely used method, called rapid upper limb assessment, provides a rating of musculoskeletal loads (McAtamney and Corlett, 1993, 2005). These ratings relate to the posture, force, and movement required by tasks. After postures are selected, they are scored using scoring forms, body part diagrams, and tables. The scores are converted to actions ranging from "acceptable" to "immediate changes required." For tasks that relate to additional body parts, the rapid entire body assessment method can be used. Additional methods include the strain index, the Ovako working posture analysis system, and the portable ergonomics observation method (Hedge, 2005).
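The last step of the rapid upper limb assessment, converting a score into a recommended action, can be sketched in a few lines of Python. The cut-offs below follow the four action levels commonly reported for the method's grand score (1 to 7); they are not stated in this chapter and should be checked against McAtamney and Corlett's scoring sheets before use. The function name is hypothetical, and the earlier steps (scoring postures with the body part diagrams and tables) are not reproduced here.

```python
from typing import Tuple


def rula_action_level(grand_score: int) -> Tuple[int, str]:
    """Map a RULA grand score (1-7) to an action level and recommendation.

    Cut-offs are the commonly cited ones and are an assumption here,
    not taken from this chapter.
    """
    if not 1 <= grand_score <= 7:
        raise ValueError("RULA grand score must be between 1 and 7")
    if grand_score <= 2:
        return 1, "acceptable"
    if grand_score <= 4:
        return 2, "further investigation; changes may be needed"
    if grand_score <= 6:
        return 3, "investigation and changes required soon"
    return 4, "immediate changes required"


# Example: grand scores recorded for three tasks in a prototype evaluation
# (hypothetical task names and scores).
for task, score in {"load tray": 3, "reach overhead": 6, "twist and lift": 7}.items():
    level, action = rula_action_level(score)
    print(f"{task}: score {score} -> action level {level} ({action})")
```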

Methods for Assessing Fatigue

The previously mentioned methods do not really address the measurement of work effort and fatigue. Methods that attempt to quantify effort and fatigue include the Borg Ratings of Perceived Exertion scale and the Muscle Fatigue Assessment method (Hedge, 2005).

The Borg ratings increase linearly with oxygen consumption, whereby a range of 6-20 was established for healthy, middle-aged people (Borg, 2005). The scale provides a measure of exertion intensity and thus provides quantitative data when evaluating a system or proposed system that places physical demands on users. One limitation is that, while quantitative, the scale relies on perceived exertion.
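To show how ratings on the 6-20 scale might be summarized during a system evaluation, the sketch below validates a set of ratings and applies a widely quoted rule of thumb that heart rate in beats per minute is roughly ten times the rating for healthy adults. That rule of thumb is not stated in this chapter and is only an approximation; the function names and the sample ratings are hypothetical.

```python
from statistics import mean
from typing import Dict, List

BORG_MIN, BORG_MAX = 6, 20  # range of the Borg ratings described above


def approx_heart_rate(rating: int) -> int:
    """Rough heart-rate estimate (beats/min) from one Borg rating.

    Uses the rule of thumb HR ~= 10 x rating, an assumption that holds
    only approximately and only for healthy adults.
    """
    if not BORG_MIN <= rating <= BORG_MAX:
        raise ValueError(f"rating must be between {BORG_MIN} and {BORG_MAX}")
    return 10 * rating


def summarize_ratings(ratings: List[int]) -> Dict[str, float]:
    """Summarize the ratings collected from several users for one task."""
    for rating in ratings:
        approx_heart_rate(rating)  # reuse the range check
    return {
        "mean_rating": mean(ratings),
        "max_rating": max(ratings),
        "approx_mean_heart_rate": 10 * mean(ratings),
    }


# Hypothetical ratings from three users performing the same lifting task.
print(summarize_ratings([11, 13, 15]))
```

Because the ratings are self-reported, a summary like this is best read alongside observational or physiological measures rather than on its own.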

Strategies for reducing risk can be pursued after defining the level of effort required. The Muscle Fatigue Assessment method works best when applied to production tasks having less than 12-15 repetitions/minute with the same muscle groups and is ideal for team evaluations of a task (Rodgers,
